We’ve been led to believe that we are on the precipice of an Artificial Intelligence explosion.

Yet progress in our digital assistants and self-driving cars seems to have stalled. Computers choke on even the simplest requests, and most of the digital world is still handcrafted by humans.

But quietly, hidden in plain sight, another revolution is already in full swing. It has somehow escaped public scrutiny and won’t be found dominating the news with dramatic headlines. The most profound invention of our time isn’t AI. It is AT – Artificial Time.

A recent article by Venkatesh Rao, “Superhistory, not Superintelligence,” opened my eyes to what’s happening.

Human civilization has always been limited by our ability to process information. From the scale of individual human lives to the largest institutions, storing information has always been tremendously costly. Our means of recording it have been limited to cave drawings, stone tablets, hieroglyphics, and fragile paper covered in inexact symbols, all of it at risk of being lost, damaged, or stolen.

Since it wasn’t possible to store everything, we had to decide what was worth saving. Our history is therefore lossy – full of holes, mistakes, and misinterpretations.

Our ability to process information has also been severely limited. Human brains were the only form of computation available, which meant that we had to break information into tiny, manageable bricks and load them into our minds before we could think about it.

With the invention of modern digital technology, these bottlenecks began to give way. The rise of Big Data allowed us to store everything and figure out what to do with it later. A more recent and even more powerful invention, machine learning, now allows us to analyze and draw conclusions from more data than any number of humans could ever comprehend.

It is this second invention that has truly unlocked the ability of thinking machines to manipulate time. And we are just beginning to learn how to harness it.

Human-machine learning

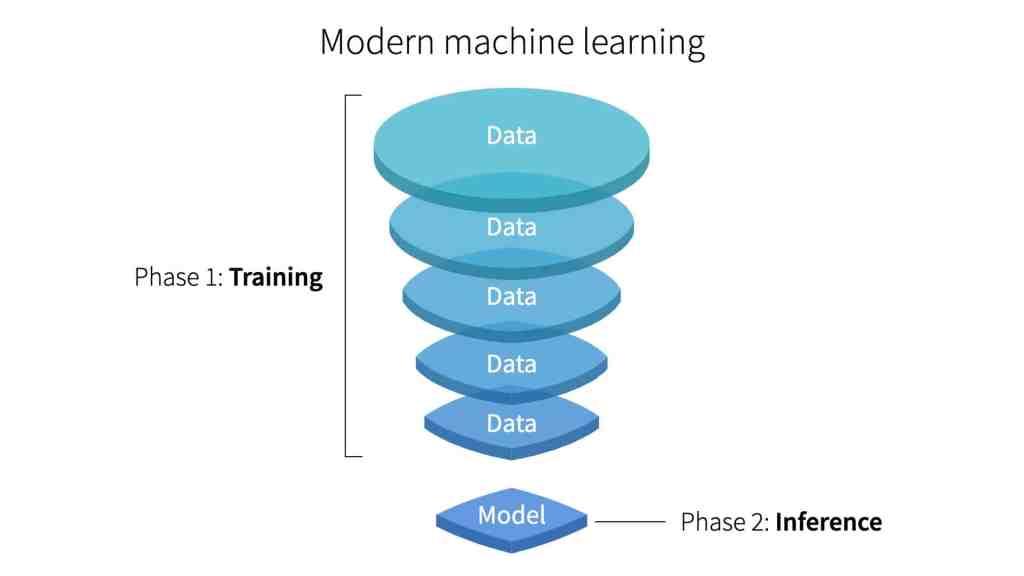

Modern machine learning works in two stages: first, there is a “training” phase, followed by an “inference” phase.

The training phase is computationally expensive – it takes a lot of resources. Algorithms digest huge amounts of data and turn it into models for specific kinds of tasks.

Examples include models for natural language processing (which Siri uses to understand your requests), image recognition (which Instagram uses to recognize the faces of your friends), and recommendations (which Netflix uses to guess what you’ll want to watch next).

The inference phase, on the other hand, is computationally cheap: it takes little computing power. The models developed in the training phase are applied to new situations they haven’t seen before. In other words, the algorithm is trained in the first phase and uses that training to make decisions in the second.
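To make the asymmetry between the two phases concrete, here is a toy sketch in Python. It assumes a one-variable linear model fit by stochastic gradient descent as a stand-in for the far larger models described above; the functions `train` and `infer` and the synthetic data are hypothetical illustrations, not how Siri, Instagram, or Netflix actually work. Training touches every data point on every pass, while inference is a single multiply and add.

```python
import random

# Toy stand-in (an assumption for illustration): a one-variable linear
# model y = w * x + b, fit by stochastic gradient descent.


def train(data, epochs=1000, lr=0.01):
    """Training phase: sweep over the whole dataset many times.

    Cost grows with epochs * len(data).
    """
    w, b = 0.0, 0.0
    steps = 0
    for _ in range(epochs):
        for x, y in data:
            error = (w * x + b) - y
            # Gradient step on half the squared error for this one example
            w -= lr * error * x
            b -= lr * error
            steps += 1
    return (w, b), steps


def infer(model, x):
    """Inference phase: one multiply and one add, however long training took."""
    w, b = model
    return w * x + b


random.seed(0)
# Hypothetical synthetic data: points scattered near the line y = 3x + 2
xs = [i / 10 for i in range(50)]
data = [(x, 3.0 * x + 2.0 + random.uniform(-0.1, 0.1)) for x in xs]

model, steps = train(data)
print(steps)               # 50000 update steps (1000 epochs * 50 points)
print(infer(model, 10.0))  # roughly 3 * 10 + 2 = 32
```

Scaled up, the same split holds in this sketch: the cost of the training loop grows with the size of the dataset and the number of passes, while each call to `infer` costs the same no matter how much data went into training.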

The most important takeaway for our purposes is that the training phase often requires some of the most powerful computers in the world and involves a volume of data orders of magnitude beyond what any human could ever make sense of. It would take hundreds or thousands of human lifetimes to put in an equivalent amount of cognitive effort.

But the inference phase is very different. It involves relatively simple models scaled down to human size. Once you have a useful model, it can be run within seconds or minutes on the cheapest laptops or smartphones.

Or even, in some cases, on a biological human brain.

The king of chess

In 2013, the world of international chess was shocked by an upset.

A 22-year-old prodigy from Norway, Magnus Carlsen, defeated the reigning world champion, Viswanathan Anand of India, in a series of 10 spellbinding games.

Conventional wisdom has long held that older chess players beat younger, more talented ones on experience. They might not have the raw intellectual horsepower, but they’ve seen more games over more years. The distilled wisdom of all that experience is supposed to let them see opportunities and risks their opponents can’t.

But when Carlsen emerged victorious, something different was on display. Carlsen was the first chess world champion to have trained primarily against sophisticated chess AIs available on personal computers as he grew up in the 2000s.

As a result, his playing style was different from any human that came before him. He would push hard all the way to the end, exploring unconventional lines of play when humans would normally have given up. He wasn’t bound by the traditions and conventions passed down from one generation of chess grandmasters to the next.

In a very real sense, Carlsen is an AI-augmented player. He might not receive the direct assistance of a computer during the matches, but his internal chess-playing instincts draw on the “models” he’s developed over thousands of matches against the world’s best chess computers.

Those models are the distilled result of thousands of years’ worth of chess-playing experience. They aren’t bound by the pace of biological time: a chess algorithm can be pitted against itself and play countless games in parallel, continuously, at speeds unfathomable to living organisms.

It is as if the computer travels at light speed into the future and then comes back to our time to share with us the lessons it’s discovered.

In a sense, Magnus Carlsen wasn’t 22 years old when he became the world champion. In terms of games played and experience assimilated, he was more like 200 years old. And with the continued development of even more sophisticated chess algorithms, whoever eventually defeats him might be 2,000 years old.

Augmenting human intelligence

The Carlsen example shows that it is not the raw intellectual power of our technology that matters. Even the best chess algorithm in the world can’t match a 2-year-old child’s ability to move through the world.

The power of technology comes from its ability to escape historical time. Algorithms can accelerate time and learn on a vastly steeper learning curve than even the smartest human could attain.

Luckily, this is not a zero-sum proposition. For now, computers remain under our control. By attaching them to our bodies and minds as “cognitive prosthetics,” we can raise our effective experiential age and go beyond the limits of time ourselves.

This is already happening every day.

Every time you use Google to look up the answer to a question that your grandmother would have simply resigned herself to never knowing, you “data-age” at an accelerated pace. We used to have to wait for answers to arrive in due course. Now we simply accelerate time and pull them from the future forward into the present.

Every time you use an auto-complete writing tool like TextSpark.ai, you are drawing on a vast pool of reading and writing experience equivalent to hundreds if not thousands of years of human life experience. Such tools could allow you to write like no human has ever written before.

Every time you use a digital notetaking or knowledge management tool (which I call a Second Brain), you are pushing and pulling bits of information through Artificial Time. Instead of drawing from only the immediately available lessons of the recent past, you are harnessing the intellectual output of years. You are not only smarter, but experientially wiser.

Escaping history

What all this means is that our technology isn’t just a few decades old. Measured in experiential time, computers are ancient oracles spanning thousands of years. The pyramids of Egypt are mere babies next to GPT-3.

AIs have escaped natural time and are now sensing, recording, generating, and digesting history at the rate of decades or centuries per week. We are learning how to inject this Artificial Time into our own brains, adding 100 years of experience for every year we live.

You are not living as a witness to the rise of Artificial Intelligence. You are living as an agent augmented by Artificial Time. You are living at the end of history, and entering an accelerated future created by machines.

Thank you to Matthias Frank, Molly Fisher, H.P. Arvez, Abdur Rahman, Trent Hamm, and Brian Wallenfelt for their feedback and suggestions on this piece.
